Every year, thousands of people are harmed by medications that seemed safe when they were approved. Some reactions don’t show up in clinical trials because those trials involve only a few thousand patients over a few months. Real-world use reveals problems that no lab test could predict - a rash that appears after six months, a heart rhythm issue in elderly patients taking two common pills together, or sudden liver damage in people with a rare genetic variant. Until recently, catching these issues meant waiting for doctors to report them, sifting through stacks of paper forms, and hoping someone noticed a pattern. Now, artificial intelligence is changing all of that.

How AI Sees What Humans Miss

Traditional drug safety monitoring relies on voluntary reports from doctors, patients, and pharmacists. These reports are often incomplete, delayed, or buried in databases with no way to connect the dots. One patient reports nausea. Another reports dizziness. A third mentions a strange bruise. Individually, they mean little. Together, they might point to a dangerous interaction. AI doesn’t wait for humans to spot the pattern. It scans millions of records at once.

Systems like the FDA’s Sentinel Initiative analyze data from over 300 million patient records drawn from hospitals, insurance claims, and electronic health records. Machine learning models look for unusual spikes in symptoms tied to specific drugs - even if those symptoms were never documented as side effects before. Natural language processing (NLP) tools read doctors’ notes, patient forums, and social media posts to pull out hidden clues. A 2025 study by Lifebit.ai found that AI detected 12-15% of adverse events that would have gone completely unnoticed with traditional methods.
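One simple way to picture an "unusual spike" is a disproportionality statistic. The sketch below computes the proportional reporting ratio (PRR), a classic pharmacovigilance measure, from a 2x2 table of report counts. It is an illustration only: nothing here describes what Sentinel or any vendor actually runs, and the class name, field names, and counts are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReportCounts:
    """2x2 table of spontaneous reports (all counts are hypothetical)."""
    drug_event: int    # reports naming the drug AND the event of interest
    drug_other: int    # reports naming the drug with any other event
    other_event: int   # reports naming other drugs AND the event
    other_other: int   # reports naming other drugs with any other event


def proportional_reporting_ratio(counts: ReportCounts) -> float:
    """PRR = share of the drug's reports with the event,
    divided by the same share among all other drugs."""
    drug_total = counts.drug_event + counts.drug_other
    other_total = counts.other_event + counts.other_other
    if drug_total == 0 or other_total == 0 or counts.other_event == 0:
        raise ValueError("PRR is undefined for these counts")
    drug_share = counts.drug_event / drug_total
    other_share = counts.other_event / other_total
    return drug_share / other_share


if __name__ == "__main__":
    example = ReportCounts(drug_event=20, drug_other=80,
                           other_event=100, other_other=9900)
    print(f"PRR = {proportional_reporting_ratio(example):.1f}")
```

A PRR well above 1 means the event shows up more often in reports for this drug than for other drugs. It is a prompt for human review, not proof that the drug caused the event.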

One real example: In early 2024, a new anticoagulant was launched. Within three weeks, an AI system flagged an unusual number of patients developing severe bleeding after taking the drug along with a common antifungal. The interaction hadn’t been seen in trials because the antifungal was rarely prescribed to the same group of patients. By the time human reviewers caught it, the AI had already flagged over 200 cases. That’s not luck - it’s pattern recognition at scale.

The Data Behind the Detection

AI doesn’t work in a vacuum. It needs data - lots of it. Modern pharmacovigilance systems pull from six major sources: electronic health records (EHRs), insurance claims, spontaneous adverse event reports, scientific literature, social media, and even wearable devices. Each source adds a piece of the puzzle.

Wearables, for instance, now track heart rate, sleep patterns, and activity levels. If a patient starts taking a new blood pressure drug and their nighttime heart rate suddenly spikes every day at 3 a.m., that’s a signal. A human might never notice. An AI system can correlate that with thousands of other similar cases across different hospitals.
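To make the wearable example concrete, here is a minimal sketch of how a nighttime heart rate spike could be flagged against a patient's own baseline using a z-score. The function name, the threshold of 3 standard deviations, and the readings are assumptions chosen for illustration, not a description of any deployed system.

```python
from statistics import mean, stdev


def is_nighttime_spike(baseline_bpm: list[float], new_bpm: float,
                       z_threshold: float = 3.0) -> bool:
    """Return True when a new nighttime reading sits more than
    z_threshold sample standard deviations above the baseline mean."""
    if len(baseline_bpm) < 2:
        raise ValueError("need at least two baseline readings")
    spread = stdev(baseline_bpm)
    if spread == 0:
        # Perfectly flat baseline: any increase counts as a spike.
        return new_bpm > baseline_bpm[0]
    z_score = (new_bpm - mean(baseline_bpm)) / spread
    return z_score > z_threshold


if __name__ == "__main__":
    # Hypothetical 3 a.m. readings from the nights before the new drug.
    before = [58, 60, 62, 60, 60]
    print(is_nighttime_spike(before, 72))
    print(is_nighttime_spike(before, 61))
```

A single flagged night means little on its own. The value comes from seeing the same flag repeat for one patient and then recur across many patients on the same drug.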

But data quality matters. If a hospital’s EHR system uses inconsistent terminology - calling the same drug by three different names, or missing key patient demographics - the AI will struggle. That’s why 35-45% of implementation time for these systems goes into cleaning and standardizing data. Without clean input, even the smartest algorithm gives bad output.
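A small sketch shows what "standardizing" can mean in practice: mapping the different names a record might use for one drug onto a single canonical name. The lookup table here is a tiny hand-written assumption. Production systems typically rely on a maintained vocabulary such as RxNorm instead of a hard-coded dictionary.

```python
# Hypothetical synonym table: brand names and regional names mapped
# to one canonical ingredient name.
DRUG_SYNONYMS = {
    "paracetamol": "acetaminophen",
    "tylenol": "acetaminophen",
    "apap": "acetaminophen",
}


def normalize_drug_name(raw_name: str) -> str:
    """Lowercase, collapse whitespace, then map known synonyms
    to their canonical name. Unknown names pass through cleaned."""
    cleaned = " ".join(raw_name.strip().lower().split())
    return DRUG_SYNONYMS.get(cleaned, cleaned)


if __name__ == "__main__":
    for entry in ["  Tylenol ", "PARACETAMOL", "Acetaminophen"]:
        print(repr(entry), "->", normalize_drug_name(entry))
```

Once all three spellings collapse to one name, reports that used to sit in three separate buckets can be counted together.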

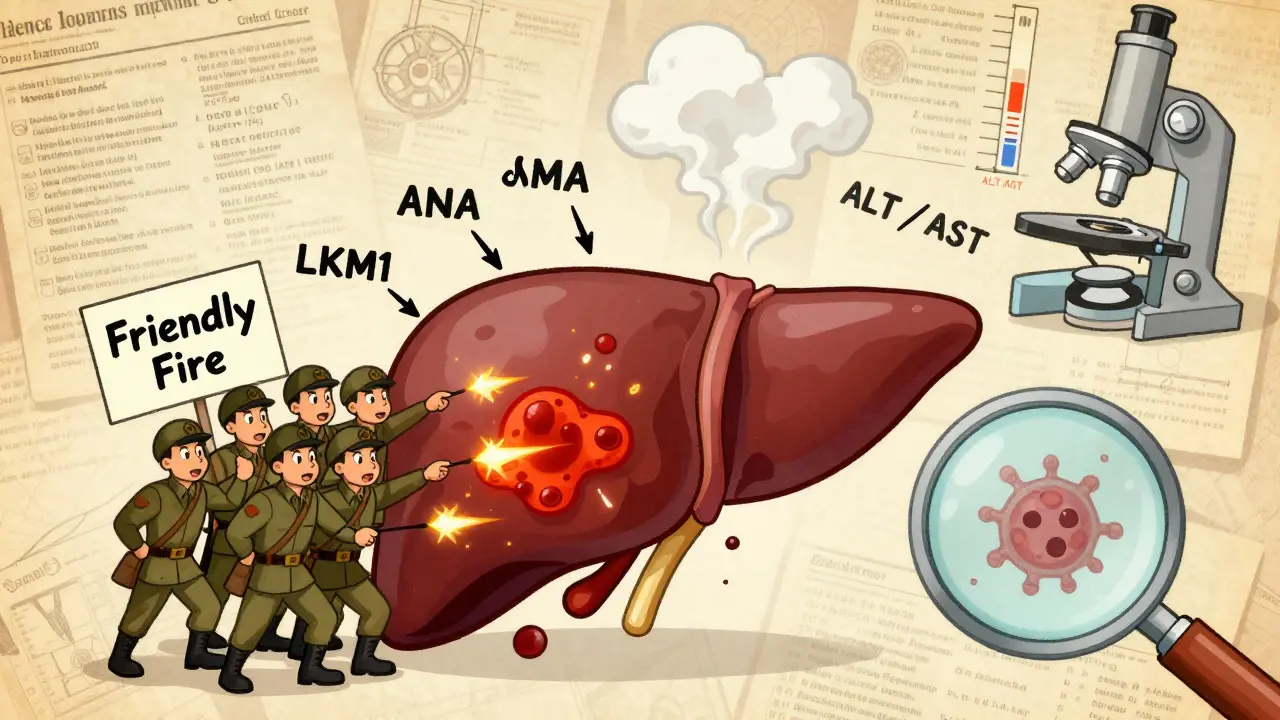

And then there’s the problem of bias. Most health data comes from people who have access to care - middle-class, urban, insured patients. That means AI systems may miss risks affecting rural communities, low-income groups, or people of color whose symptoms are underreported or misdiagnosed. A 2025 analysis in Frontiers showed that AI missed a dangerous interaction in a diabetes drug because the training data had almost no records from Medicaid patients. The algorithm didn’t “know” to look for it because it had never seen enough cases.
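One basic safeguard is to check how well each patient group is represented before a model is trained. The sketch below flags groups whose share of the records falls under a chosen minimum. The group labels, the 5% cutoff, and the function name are assumptions for illustration. A real audit would look at many more dimensions than insurance type.

```python
from collections import Counter
from typing import Iterable


def underrepresented_groups(record_groups: list[str],
                            expected_groups: Iterable[str],
                            min_share: float = 0.05) -> list[str]:
    """Return the expected groups whose share of records is below
    min_share, including groups with no records at all."""
    if not record_groups:
        raise ValueError("no records to audit")
    counts = Counter(record_groups)
    total = len(record_groups)
    return sorted(group for group in set(expected_groups)
                  if counts[group] / total < min_share)


if __name__ == "__main__":
    # Hypothetical training set labeled by insurance type.
    records = ["private"] * 90 + ["medicare"] * 8 + ["medicaid"] * 2
    expected = ["private", "medicare", "medicaid", "uninsured"]
    print(underrepresented_groups(records, expected))
```

Passing the list of expected groups matters: a group that is missing entirely would never show up if the check only counted the labels already present in the data.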

Speed: From Weeks to Hours

Before AI, detecting a safety signal could take weeks - sometimes months. A company would get a few reports, compile them, send them to a team, wait for a review, then decide whether to investigate further. By then, hundreds or thousands of patients might have been exposed.

Today, AI can scan incoming reports in real time. The FDA’s Sentinel System has processed over 250 safety analyses since its full rollout. One drug, approved in January 2024, had its first safety signal detected in just 47 hours. That’s faster than most hospitals can schedule a follow-up appointment.

Speed isn’t just about saving time - it’s about saving lives. When a new antibiotic was linked to a rare but fatal liver condition, AI flagged it within days. The FDA issued a warning before the drug reached small-town pharmacies. Without AI, the warning might have come six months later - after dozens of deaths.

Where AI Still Falls Short

AI is powerful, but it’s not perfect. It’s great at spotting patterns - but terrible at explaining why they matter. A system might say, “Patients on Drug X and Drug Y have a 40% higher risk of kidney injury.” But it can’t tell you whether it’s the combination, a genetic factor, or a missing vitamin. That’s where human experts come in.

Pharmacologists, clinicians, and toxicologists still need to interpret AI findings. The European Medicines Agency (EMA) made this clear in March 2025: AI tools must include transparent documentation showing how they reach conclusions. No black boxes. No “trust us” algorithms.

Another limitation: AI can’t replace clinical judgment. A patient might report fatigue after taking a new medication. AI flags it. But is it the drug? Stress? Sleep apnea? Depression? Only a doctor can tell. AI gives you the question. You still need to answer it.

And then there’s the integration problem. Many pharmaceutical companies still use 20-year-old safety databases that weren’t built for AI. Connecting modern AI tools to legacy systems can take 6-9 months and cost millions. A 2025 survey found that 52% of pharmacovigilance teams cited this as their biggest hurdle.

Who’s Using This Tech - and How

Big pharma leads the charge. Of the top 50 pharmaceutical companies, 68% have implemented AI in their drug safety programs as of early 2025. Companies like GlaxoSmithKline, Pfizer, and Merck use AI to monitor their own drugs in real time. IQVIA, one of the largest contract research organizations, runs AI-powered safety systems for 45 of those top 50 companies.

Smaller firms can’t afford to build their own systems. But they can buy access. Vendors like Lifebit offer cloud-based platforms that process over 1.2 million patient records daily. For $200,000 a year, a small biotech can get the same monitoring power as a Fortune 500 company.

The FDA isn’t just watching - it’s guiding. Its Emerging Drug Safety Technology Program (EDSTP), launched in 2023, works directly with companies to test new AI tools before they go live. In April 2025, the FDA started three pilot programs testing AI across five million patient records from diverse health systems. The goal? To build standards so every AI tool meets the same baseline for accuracy and fairness.

The Future: From Detection to Prevention

The next wave of AI in drug safety won’t just detect problems - it will prevent them. Researchers are already training models to predict who’s at risk before they even take a drug. By combining genomic data, lifestyle factors, and medical history, AI can now estimate a patient’s personal risk for certain side effects.

Seven major academic medical centers are testing this right now. One system, in development at Stanford, uses a patient’s DNA to predict whether they’ll have a bad reaction to a common painkiller. If the risk is high, the system flags it for the prescriber before the prescription is filled. Early results show a 70% reduction in adverse events for high-risk patients.

Another frontier is causal inference. Right now, AI finds correlations - Drug X and symptom Y happen together. But are they linked? Or just coincidence? New models using counterfactual analysis (what would have happened if the patient hadn’t taken the drug?) are starting to answer that. Lifebit’s 2024 breakthrough improved causal accuracy by 22.7% - meaning fewer false alarms and more real risks caught.
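The gap between correlation and a counterfactual estimate can be shown with a toy example. The sketch below compares a crude risk difference with one standardized over a single confounder (age group), which approximates "what if everyone had taken the drug versus no one". This is a textbook standardization sketch, not Lifebit's method, and it assumes age group is the only confounder. All names and counts are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stratum:
    """Counts for one confounder level (hypothetical data)."""
    treated_n: int
    treated_events: int
    untreated_n: int
    untreated_events: int


def crude_risk_difference(strata: list[Stratum]) -> float:
    """Risk in all treated minus risk in all untreated, ignoring strata."""
    treated_n = sum(s.treated_n for s in strata)
    untreated_n = sum(s.untreated_n for s in strata)
    treated_events = sum(s.treated_events for s in strata)
    untreated_events = sum(s.untreated_events for s in strata)
    return treated_events / treated_n - untreated_events / untreated_n


def standardized_risk_difference(strata: list[Stratum]) -> float:
    """Average the within-stratum risk differences, weighting each
    stratum by its share of the whole population."""
    if any(s.treated_n == 0 or s.untreated_n == 0 for s in strata):
        raise ValueError("every stratum needs treated and untreated patients")
    total = sum(s.treated_n + s.untreated_n for s in strata)
    difference = 0.0
    for s in strata:
        weight = (s.treated_n + s.untreated_n) / total
        difference += weight * (s.treated_events / s.treated_n
                                - s.untreated_events / s.untreated_n)
    return difference


if __name__ == "__main__":
    young = Stratum(treated_n=100, treated_events=2,
                    untreated_n=900, untreated_events=18)
    old = Stratum(treated_n=900, treated_events=90,
                  untreated_n=100, untreated_events=10)
    print(f"crude:        {crude_risk_difference([young, old]):.3f}")
    print(f"standardized: {standardized_risk_difference([young, old]):.3f}")
```

In this made-up data the drug looks harmful in the crude comparison only because older, higher-risk patients were far more likely to receive it. Within each age group the risk is identical, so the standardized difference is zero.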

By 2027, experts predict AI will distinguish coincidence from causation 60% better than today. That’s not just an upgrade - it’s a revolution.

What This Means for Patients

For you, the person taking the medication, this means safer drugs and faster warnings. If you’re on a new prescription, there’s a better chance your doctor will know about hidden risks - even ones that haven’t been officially labeled yet.

It also means more personalized care. In the near future, your pharmacist might get an alert: “Patient has genetic variant CYP2D6*10 - avoid this drug.” That’s not science fiction. It’s already happening in pilot clinics.

But it also means you need to be part of the system. If you have a side effect, report it. Use the app. Call your doctor. AI can’t detect what isn’t recorded. The more data we feed in, the better it gets.

Final Thoughts

Artificial intelligence isn’t replacing pharmacovigilance professionals. It’s giving them superpowers. The best systems today combine machine speed with human insight. AI finds the needle in the haystack. The expert decides if it’s a safety hazard or just a stray thread.

The technology is here. It’s working. And it’s getting better every day. The question isn’t whether AI belongs in drug safety - it’s how fast we can make sure it’s fair, transparent, and available to everyone who needs it.

Vu L

AI detecting drug side effects? Sure, let’s just hand over our lives to a black box that doesn’t even know what ‘fatigue’ means in context. Last time I checked, my grandma’s ‘weird bruise’ was from her cat, not a new pill. This isn’t progress - it’s laziness dressed up in tech jargon.

James Hilton

AI spots a rash? Cool. Now let’s see it explain why that rash only happens on Tuesdays. 😏

Mimi Bos

i just read this and thought about my cousin who had a bad reaction to a med and no one noticed until she ended up in the er. ai might not be perfect but if it helps even a little? count me in. 🙏

Payton Daily

Think about it - human minds are limited. We see trees. AI sees the forest, the soil, the wind, the ghosts of forgotten clinical trials whispering in binary. This isn’t just medicine - it’s the next stage of human evolution. We’re becoming symbiotic with machines. Some call it scary. I call it destiny. 🌌

Kelsey Youmans

While the technological advancements described are undeniably impressive, it is imperative that we maintain rigorous ethical oversight and ensure equitable access to these systems. Without deliberate intervention, algorithmic bias may exacerbate existing disparities in healthcare outcomes.

Sydney Lee

Let’s be honest: if you’re not using AI to monitor drug safety, you’re not just behind - you’re negligent. And no, ‘but my hospital uses old software’ isn’t an excuse. It’s a moral failure. We have the tools. We have the data. The only thing missing? Willingness to do what’s right.

oluwarotimi w alaka

usa and big pharma using ai to spy on us? yeah right. they dont care about safety - they care about lawsuits. this ai thing? just a way to make you think they’re doing something while they keep selling poison. and dont get me started on how they only use data from rich people. its all a scam.

Debra Cagwin

This is such an important step forward. Every person who reports a side effect - even if it seems small - is helping save lives down the line. Thank you to everyone who speaks up. And to the teams building these systems: you’re doing incredible, quiet work. Keep going.

Hakim Bachiri

AI?!?!?!?!? We’re letting computers decide who lives and who dies?!?!? Next thing you know, they’ll be telling doctors what to write in their notes. And don’t even get me started on how these systems are trained on data from people who can afford to go to the doctor - what about the rest of us?!?!? This isn’t innovation - it’s elitist garbage with a fancy name!

Celia McTighe

OMG I just had this happen to my mom!! She took that new anticoagulant and got a weird bruise - then the doctor called and said, 'Hey, we saw a pattern online, so we pulled you off it.' 🥹 I cried. Like, actually cried. This tech? It’s not magic - it’s love in code. ❤️

Ryan Touhill

It's fascinating, isn't it? The irony of relying on machine learning to detect the very human consequences of pharmaceutical intervention. We've outsourced vigilance to algorithms, yet still demand human accountability. A paradox wrapped in a firewall, secured by a compliance officer who probably doesn't understand neural networks. The future is here - and it's beautifully, terrifyingly inconsistent.